Back to articles

Back to articles

Back to articles

Back to articles

Introducing xTuring: Fast, Efficient, and Simple Fine-Tuning for LLMs.

Introducing xTuring: Fast, Efficient, and Simple Fine-Tuning for LLMs.

Published:Mar 28, 2023

10- min read

xTuring is an open source tool that allows you to create your own LLM with only three lines of code.

xTuring is an open source tool that allows you to create your own LLM with only three lines of code.

Overview

It's challenging for most people today to be able to feasibly implement a Large Language Model (LLM) for a custom application. To make an LLM that can generate highly accurate and fast text for niche domain topics or potentially to mimic a writing style, takes a lot of effort and knowledge.

We at Stochastic have worked on accelerating AI and fine-tuning for LLMs for a while now. We’re a team of Harvard researchers, ex-postdocs, and some talented ML engineers. And like you, we’re excited that language modeling has undergone a revolution in recent years with the development of large language models (LLMs) such as LLaMA, GPT-J, GPT-2, and more. People are looking for ways to innovate and create new applications with these models and open up new possibilities for applications such as automation of text deliverables, chatbots, language translation, and content generation. However, training and fine-tuning these models can be extremely costly and time-consuming.

git clone https://github.com/stochasticai/xturing.gitpip install xturingHow does xTuring work? How does it compare to other fine-tuning techniques?

One of the most significant advantages of xTuring is its ability to support both single and multi-GPU training, allowing users to fine-tune their models in a way that best suits their hardware resources.

xTuring also uses memory-efficient fine-tuning techniques like LoRA, which helps reduce hardware costs by up to 90% while also speeding up the training process. LoRA reduces the memory footprint required for fine-tuning, allowing users to train their models faster and more efficiently.

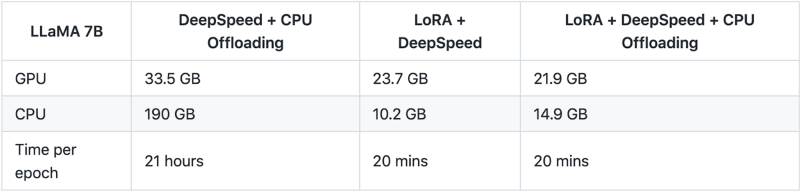

We benchmarked xTuring on the Alpaca dataset for fine-tuning and compared it to different fine-tuning techniques on the LLaMA 7B model to demonstrate the efficacy of xTuring. The dataset contains 52K instructions, and the tests were conducted on 4xA100 40GB GPU with 335GB CPU RAM.

The fine-tuning parameters were set to:

maximum sequence length: 512

batch size: 1

The results showed that fine-tuning the LLaMA 7B model with DeepSpeed + CPU offloading resulted in a memory usage of 33.5GB on the GPU and 190GB on the CPU, with a training time of 21 hours per epoch. In contrast, fine-tuning with LoRA + DeepSpeed and LoRA + DeepSpeed + CPU offloading resulted in significantly reduced memory usage, with 23.7GB and 21.9GB on the GPU, respectively. The CPU memory usage was also reduced, with 10.2GB and 14.9GB, respectively. Moreover, both LoRA + DeepSpeed and LoRA + DeepSpeed + CPU offloading achieved a faster training time of just 20 minutes per epoch.

Using xTuring is incredibly straightforward. The tool is designed to be user-friendly, with a simple and intuitive interface. Users can fine-tune their models with just a few clicks, and xTuring takes care of the rest. This ease of use makes xTuring an ideal tool for both beginners and advanced LLM users.

xTuring is a must-have tool for anyone who wants to fine-tune LLMs quickly, efficiently, and cost-effectively. With support for both single and multi-GPU training, memory-efficient techniques like LoRA, and a user-friendly interface, xTuring is the ultimate solution for fine-tuning large language models.

Check out xTuring now. Leave a star if you find it useful, we would greatly appreciate it 😉 .

Overview

It's challenging for most people today to be able to feasibly implement a Large Language Model (LLM) for a custom application. To make an LLM that can generate highly accurate and fast text for niche domain topics or potentially to mimic a writing style, takes a lot of effort and knowledge.

We at Stochastic have worked on accelerating AI and fine-tuning for LLMs for a while now. We’re a team of Harvard researchers, ex-postdocs, and some talented ML engineers. And like you, we’re excited that language modeling has undergone a revolution in recent years with the development of large language models (LLMs) such as LLaMA, GPT-J, GPT-2, and more. People are looking for ways to innovate and create new applications with these models and open up new possibilities for applications such as automation of text deliverables, chatbots, language translation, and content generation. However, training and fine-tuning these models can be extremely costly and time-consuming.

git clone https://github.com/stochasticai/xturing.gitpip install xturingHow does xTuring work? How does it compare to other fine-tuning techniques?

One of the most significant advantages of xTuring is its ability to support both single and multi-GPU training, allowing users to fine-tune their models in a way that best suits their hardware resources.

xTuring also uses memory-efficient fine-tuning techniques like LoRA, which helps reduce hardware costs by up to 90% while also speeding up the training process. LoRA reduces the memory footprint required for fine-tuning, allowing users to train their models faster and more efficiently.

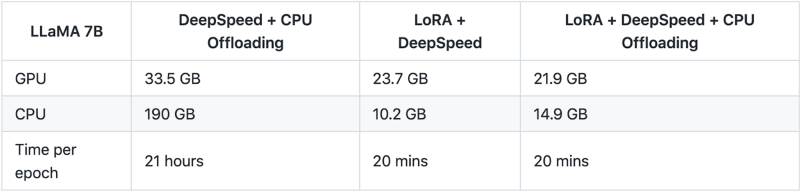

We benchmarked xTuring on the Alpaca dataset for fine-tuning and compared it to different fine-tuning techniques on the LLaMA 7B model to demonstrate the efficacy of xTuring. The dataset contains 52K instructions, and the tests were conducted on 4xA100 40GB GPU with 335GB CPU RAM.

The fine-tuning parameters were set to:

maximum sequence length: 512

batch size: 1

The results showed that fine-tuning the LLaMA 7B model with DeepSpeed + CPU offloading resulted in a memory usage of 33.5GB on the GPU and 190GB on the CPU, with a training time of 21 hours per epoch. In contrast, fine-tuning with LoRA + DeepSpeed and LoRA + DeepSpeed + CPU offloading resulted in significantly reduced memory usage, with 23.7GB and 21.9GB on the GPU, respectively. The CPU memory usage was also reduced, with 10.2GB and 14.9GB, respectively. Moreover, both LoRA + DeepSpeed and LoRA + DeepSpeed + CPU offloading achieved a faster training time of just 20 minutes per epoch.

Using xTuring is incredibly straightforward. The tool is designed to be user-friendly, with a simple and intuitive interface. Users can fine-tune their models with just a few clicks, and xTuring takes care of the rest. This ease of use makes xTuring an ideal tool for both beginners and advanced LLM users.

xTuring is a must-have tool for anyone who wants to fine-tune LLMs quickly, efficiently, and cost-effectively. With support for both single and multi-GPU training, memory-efficient techniques like LoRA, and a user-friendly interface, xTuring is the ultimate solution for fine-tuning large language models.

Check out xTuring now. Leave a star if you find it useful, we would greatly appreciate it 😉 .

Overview

It's challenging for most people today to be able to feasibly implement a Large Language Model (LLM) for a custom application. To make an LLM that can generate highly accurate and fast text for niche domain topics or potentially to mimic a writing style, takes a lot of effort and knowledge.

We at Stochastic have worked on accelerating AI and fine-tuning for LLMs for a while now. We’re a team of Harvard researchers, ex-postdocs, and some talented ML engineers. And like you, we’re excited that language modeling has undergone a revolution in recent years with the development of large language models (LLMs) such as LLaMA, GPT-J, GPT-2, and more. People are looking for ways to innovate and create new applications with these models and open up new possibilities for applications such as automation of text deliverables, chatbots, language translation, and content generation. However, training and fine-tuning these models can be extremely costly and time-consuming.

git clone https://github.com/stochasticai/xturing.gitpip install xturingHow does xTuring work? How does it compare to other fine-tuning techniques?

One of the most significant advantages of xTuring is its ability to support both single and multi-GPU training, allowing users to fine-tune their models in a way that best suits their hardware resources.

xTuring also uses memory-efficient fine-tuning techniques like LoRA, which helps reduce hardware costs by up to 90% while also speeding up the training process. LoRA reduces the memory footprint required for fine-tuning, allowing users to train their models faster and more efficiently.

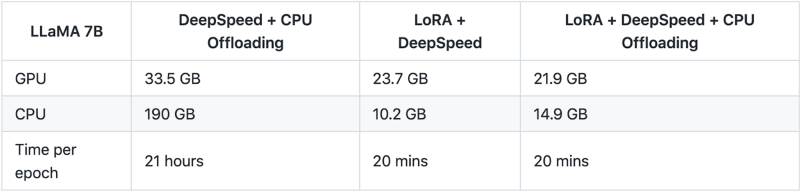

We benchmarked xTuring on the Alpaca dataset for fine-tuning and compared it to different fine-tuning techniques on the LLaMA 7B model to demonstrate the efficacy of xTuring. The dataset contains 52K instructions, and the tests were conducted on 4xA100 40GB GPU with 335GB CPU RAM.

The fine-tuning parameters were set to:

maximum sequence length: 512

batch size: 1

The results showed that fine-tuning the LLaMA 7B model with DeepSpeed + CPU offloading resulted in a memory usage of 33.5GB on the GPU and 190GB on the CPU, with a training time of 21 hours per epoch. In contrast, fine-tuning with LoRA + DeepSpeed and LoRA + DeepSpeed + CPU offloading resulted in significantly reduced memory usage, with 23.7GB and 21.9GB on the GPU, respectively. The CPU memory usage was also reduced, with 10.2GB and 14.9GB, respectively. Moreover, both LoRA + DeepSpeed and LoRA + DeepSpeed + CPU offloading achieved a faster training time of just 20 minutes per epoch.

Using xTuring is incredibly straightforward. The tool is designed to be user-friendly, with a simple and intuitive interface. Users can fine-tune their models with just a few clicks, and xTuring takes care of the rest. This ease of use makes xTuring an ideal tool for both beginners and advanced LLM users.

xTuring is a must-have tool for anyone who wants to fine-tune LLMs quickly, efficiently, and cost-effectively. With support for both single and multi-GPU training, memory-efficient techniques like LoRA, and a user-friendly interface, xTuring is the ultimate solution for fine-tuning large language models.

Check out xTuring now. Leave a star if you find it useful, we would greatly appreciate it 😉 .

Overview

It's challenging for most people today to be able to feasibly implement a Large Language Model (LLM) for a custom application. To make an LLM that can generate highly accurate and fast text for niche domain topics or potentially to mimic a writing style, takes a lot of effort and knowledge.

We at Stochastic have worked on accelerating AI and fine-tuning for LLMs for a while now. We’re a team of Harvard researchers, ex-postdocs, and some talented ML engineers. And like you, we’re excited that language modeling has undergone a revolution in recent years with the development of large language models (LLMs) such as LLaMA, GPT-J, GPT-2, and more. People are looking for ways to innovate and create new applications with these models and open up new possibilities for applications such as automation of text deliverables, chatbots, language translation, and content generation. However, training and fine-tuning these models can be extremely costly and time-consuming.

git clone https://github.com/stochasticai/xturing.gitpip install xturingHow does xTuring work? How does it compare to other fine-tuning techniques?

One of the most significant advantages of xTuring is its ability to support both single and multi-GPU training, allowing users to fine-tune their models in a way that best suits their hardware resources.

xTuring also uses memory-efficient fine-tuning techniques like LoRA, which helps reduce hardware costs by up to 90% while also speeding up the training process. LoRA reduces the memory footprint required for fine-tuning, allowing users to train their models faster and more efficiently.

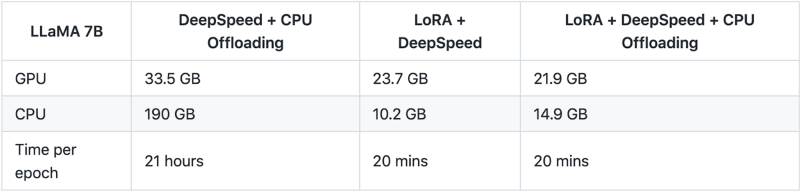

We benchmarked xTuring on the Alpaca dataset for fine-tuning and compared it to different fine-tuning techniques on the LLaMA 7B model to demonstrate the efficacy of xTuring. The dataset contains 52K instructions, and the tests were conducted on 4xA100 40GB GPU with 335GB CPU RAM.

The fine-tuning parameters were set to:

maximum sequence length: 512

batch size: 1

The results showed that fine-tuning the LLaMA 7B model with DeepSpeed + CPU offloading resulted in a memory usage of 33.5GB on the GPU and 190GB on the CPU, with a training time of 21 hours per epoch. In contrast, fine-tuning with LoRA + DeepSpeed and LoRA + DeepSpeed + CPU offloading resulted in significantly reduced memory usage, with 23.7GB and 21.9GB on the GPU, respectively. The CPU memory usage was also reduced, with 10.2GB and 14.9GB, respectively. Moreover, both LoRA + DeepSpeed and LoRA + DeepSpeed + CPU offloading achieved a faster training time of just 20 minutes per epoch.

Using xTuring is incredibly straightforward. The tool is designed to be user-friendly, with a simple and intuitive interface. Users can fine-tune their models with just a few clicks, and xTuring takes care of the rest. This ease of use makes xTuring an ideal tool for both beginners and advanced LLM users.

xTuring is a must-have tool for anyone who wants to fine-tune LLMs quickly, efficiently, and cost-effectively. With support for both single and multi-GPU training, memory-efficient techniques like LoRA, and a user-friendly interface, xTuring is the ultimate solution for fine-tuning large language models.

Check out xTuring now. Leave a star if you find it useful, we would greatly appreciate it 😉 .

Harvard Innovation Labs

125 Western Ave

Boston, MA 02163

Harvard Innovation Labs

125 Western Ave

Boston, MA 02163

Harvard Innovation Labs

125 Western Ave

Boston, MA 02163

Harvard Innovation Labs

125 Western Ave

Boston, MA 02163